A/B testing

Also called split or bucket testing.

In this testing method, two versions of a webpage or application are compared to identify which version performs better.

A/B testing is essentially an experiment where two or more variants of a page are shown to users at random, and statistical analysis is used to determine which variation performs better for a given conversion goal.
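Random assignment can be done in several ways. The sketch below uses hash-based bucketing, a common technique that is an assumption here and not something this text prescribes. It assumes each visitor has a stable identifier (the hypothetical `user_id`) and that each experiment has a name. Hashing the two together spreads users roughly evenly across variants, and each user stays in the same variant on every visit.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Return the variant this user should see in this experiment.

    Hashing experiment + user ID gives a pseudo-random but repeatable
    assignment: the same user always lands in the same variant, and
    different experiments bucket users approximately independently.
    """
    if not variants:
        raise ValueError("an experiment needs at least one variant")
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]

# Usage: split hypothetical visitors between variants A and B of a page.
for visitor in ["user-1", "user-2", "user-3"]:
    print(visitor, assign_variant(visitor, "homepage-banner", ["A", "B"]))
```

Keeping the assignment sticky matters. A user who saw A on one visit and B on the next would blur the difference between the two groups.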

A/B testing enables one to:

- Ask focused questions about changes to your webpage or application

- Collect data on the impact of those changes

- Make data-informed decisions, shifting the business perspective from 'we think' to 'we know'

Types of A/B testing

- Classic A/B test. The classic A/B test presents users with two or more variations of a page at the same URL. That way, you can compare variations of the same element.

- Split tests or redirect tests. A split test redirects your traffic to one or more distinct URLs. If you are hosting new pages on your server, this can be an effective approach.

- Multivariate or MVT test. Lastly, multivariate testing measures the impact of multiple changes on the same web page. For example, you can modify your banner, the color of your text, your presentation, and more.
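A multivariate test compares combinations of element variations. The sketch below assumes a full-factorial design, where every combination is tested. The element names are hypothetical and mirror the example above: banner, text color, and layout (standing in for "presentation").

```python
from itertools import product

# Hypothetical elements under test and the variations of each one.
factors = {
    "banner": ["current", "new"],
    "text_color": ["black", "blue"],
    "layout": ["grid", "list"],
}

def full_factorial(factors: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return every combination of element variations (a full-factorial design)."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*(factors[n] for n in names))]

combinations = full_factorial(factors)
print(len(combinations))  # 2 * 2 * 2 = 8 combinations to split traffic across
for combo in combinations:
    print(combo)
```

The number of combinations multiplies with each element you add. Each combination therefore receives a smaller share of traffic, so a multivariate test generally needs more visitors than a classic A/B test to reach a reliable result.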

In terms of technology, you can:

- Use A/B testing on websites. A/B testing on the web makes it possible to compare versions A and B of a page. The results are then analyzed against predefined objectives such as clicks, purchases, and subscriptions.

- Use A/B testing for native iPhone or Android mobile applications. A/B testing is more complex with applications, because once an app has been downloaded and installed on a smartphone, it cannot simply be swapped for a different version without publishing an update. Workarounds exist, such as delivering variation settings remotely, so that you can update your application instantly. You can then modify your design and directly analyze the impact of the change.

- Use server-side A/B testing via APIs. An API (application programming interface) enables a connection with an application for data exchange. APIs let you create campaigns or variations automatically from saved data.
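Server-side testing moves the choice of variation to your back end. This is also one way mobile apps can work around the update problem: the app asks the server which variation to show and renders it. The sketch below is hypothetical throughout. `CAMPAIGN` stands in for saved campaign data, `choose_variation` honors per-variation traffic weights, and `assignment_response` builds the JSON body that an assignment API of your own might return. It does not reproduce any particular vendor's API.

```python
import hashlib
import json

# Hypothetical saved campaign data; in practice it might be loaded from a database.
CAMPAIGN = {
    "id": "checkout-button",
    "variations": [
        {"name": "control", "weight": 50, "button_text": "Buy now"},
        {"name": "variant_b", "weight": 50, "button_text": "Complete purchase"},
    ],
}

def choose_variation(campaign: dict, user_id: str) -> dict:
    """Pick a variation on the server, honoring each variation's traffic weight."""
    total = sum(v["weight"] for v in campaign["variations"])
    if total <= 0:
        raise ValueError("campaign needs at least one variation with a positive weight")
    # Map the user to a stable point in [0, total) and find the weight band it falls in.
    key = f'{campaign["id"]}:{user_id}'.encode("utf-8")
    point = int(hashlib.sha256(key).hexdigest(), 16) % total
    for variation in campaign["variations"]:
        if point < variation["weight"]:
            return variation
        point -= variation["weight"]
    raise AssertionError("unreachable: point is always below the total weight")

def assignment_response(campaign: dict, user_id: str) -> str:
    """Build the JSON body a hypothetical assignment endpoint could return."""
    variation = choose_variation(campaign, user_id)
    settings = {k: v for k, v in variation.items() if k not in ("name", "weight")}
    return json.dumps({"campaign": campaign["id"], "variation": variation["name"], "settings": settings})

# Usage: the website or mobile app only renders the settings it receives.
print(assignment_response(CAMPAIGN, "user-42"))
```

Because the client only renders what it receives, you can change the variations or their traffic split on the server without shipping a new version of the app.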

A/B testing statistics

A/B testing is based on statistical methods. There are two main statistical methods used by A/B testing solutions:

- Frequentist approach. This approach lets you gauge the reliability of your results through a confidence level. A confidence level of 95% or more means that, if there were truly no difference between the variations, a result at least this extreme would be expected less than 5% of the time. But this method has a downside: it has a "fixed horizon", meaning that the confidence level is only meaningful once the test has reached its planned end (its predetermined sample size).

- Bayesian approach. This approach provides a probability for the result as soon as the test starts, so there is no need to wait until the end of the test to spot a trend and interpret the data. But this method also has challenges: you need to know how to read the estimated interval (a credible interval, in Bayesian terms) given during the test. As each additional conversion is recorded, the estimated probability that a given variation is the winner becomes more reliable.
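The two approaches can be compared on the same data. The sketch below assumes a frequentist two-proportion z-test and a Bayesian Beta-Binomial model with a uniform Beta(1, 1) prior. A/B testing tools may use other tests or priors. The conversion counts are hypothetical. Under the fixed-horizon rule, the frequentist result should only be read once the planned sample size has been reached.

```python
import math
import random

def frequentist_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-proportion z-test: returns (z score, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate if there is no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # both variants converted at 0% or 100%: no detectable difference
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

def bayesian_summary(conv_a: int, n_a: int, conv_b: int, n_b: int,
                     draws: int = 50_000, seed: int = 0) -> tuple[float, tuple[float, float]]:
    """Beta-Binomial model with a uniform Beta(1, 1) prior on each conversion rate.

    Returns P(rate_B > rate_A) and a 95% credible interval for rate_B - rate_A,
    both estimated by Monte Carlo sampling from the two posteriors.
    """
    rng = random.Random(seed)
    diffs = []
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        diffs.append(rate_b - rate_a)
    diffs.sort()
    prob_b_better = sum(d > 0 for d in diffs) / draws
    interval = (diffs[int(0.025 * draws)], diffs[int(0.975 * draws) - 1])
    return prob_b_better, interval

# Hypothetical counts: 200/5000 conversions for A, 250/5000 for B.
z, p = frequentist_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p-value = {p:.4f}")  # significant at 95% confidence if p < 0.05
prob, (low, high) = bayesian_summary(200, 5000, 250, 5000)
print(f"P(B > A) = {prob:.3f}, 95% credible interval for B - A: [{low:.4f}, {high:.4f}]")
```

With these made-up counts, the p-value falls below 0.05 and the Bayesian probability that B beats A comes out well above 0.95. The frequentist number is a single verdict for the planned sample. The Bayesian probability and interval can be recomputed as data arrives.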

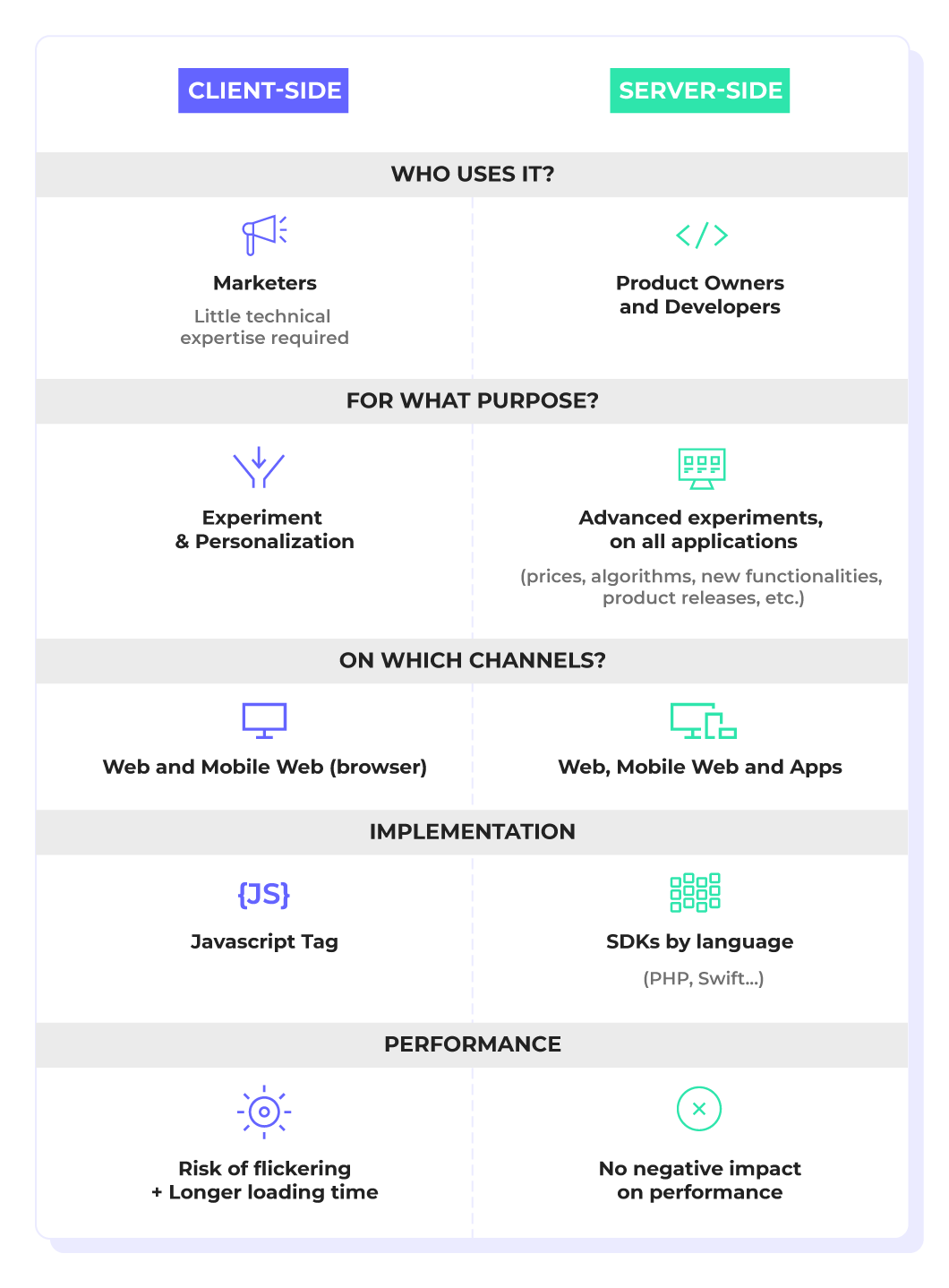

A/B testing: full stack (server-side) or client-side

The best approach will depend on your company structure, internal resources, development life cycle, and the complexity of your experiments.

- Client-side experimentation and personalization do not require advanced technical skills, making them well suited to digital marketers. They enable teams to be agile and run experiments very quickly, avoiding bottlenecks and getting test results faster.

- The server-side testing approach requires technical resources and more complex development, but it enables more powerful, scalable, and flexible experimentation.

Benefits of A/B testing

- Increase conversions

- Engage visitors with your brand

- Get to better know your visitors

- Make decisions from quantified results

- Optimize your time and budget

A/B testing mistakes

- Starting with overly complicated tests and not taking “quick wins” into account

- Not validating your hypotheses with insights on the behavior of your visitors before launching your tests

- Testing without a defined process or plan

- Launching your tests without prioritizing

- Optimizing the wrong KPIs

- Not testing continuously to draw lessons and understand changes in your visitors' behavior